My recent work has been in artificial intelligence (AI) safety research. I stayed on until the project was finished and also did some data labeling tasks.

I also ran some AI safety tests on new models like ChatGPT, Gemini, and Meta in my spare time.

I found that Gemini had a problem with its explicit content filter. I sent them feedback and explained, step by step, how the bug works.

There are so many films about AI. We often expect AI to look and act like humans. But from my point of view, AI will never truly think like a human.

Humans have complex patterns of thinking. The hardest human trait to replicate is subjectivity.

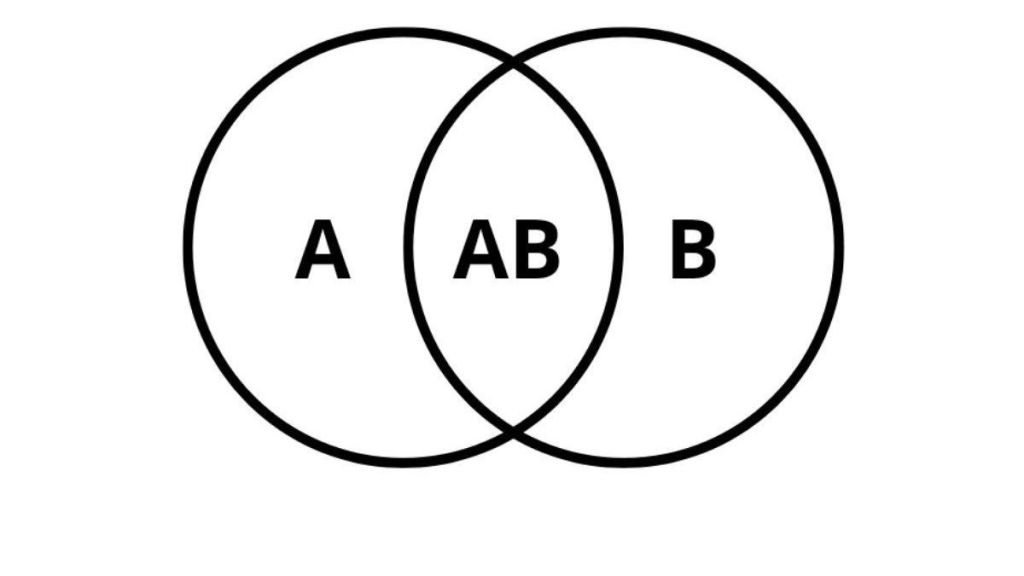

AI will produce an output of either A or B; there’s no AB. Meanwhile, human logic depends on experience and environment.

That’s why human decisions vary, and there’s always a gray area between A and B — the “AB.”

If AI companies try to replicate this to improve their models, it could lead to destructive outcomes. We don’t know what might happen if AI gains human subjectivity.

With human-like subjectivity, the possibility of AI choosing the wrong output becomes much higher. It could act in favor of certain people or entities, leading to biased or unpredictable behavior.
